Oops, wish I had checked the forums before hitting the render button.

To not bury the lead, here are the results on the 240fps video (I was able to mostly fix the flickering):

...

I think this is easily the best result yet. The high frame rate makes the motion easy to track. I also implemented the interpolation and smoothing on the 3D inference that I had mentioned earlier.
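The post doesn't say which smoother is applied to the 3D inference. As one hedged illustration, not necessarily the method used here, a centered moving average over each joint coordinate's time series looks like this. The window size is a hypothetical parameter.

```python
def moving_average(series, window=5):
    """Centered moving average of one joint coordinate over time.

    window: hypothetical odd smoothing width, in frames.
    Near the start and end, only the frames that exist are averaged.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i in range(len(series)):
        lo = max(0, i - half)
        hi = min(len(series), i + half + 1)
        chunk = series[lo:hi]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


# A single one-frame spike gets spread out and damped.
print(moving_average([0.0, 0.0, 3.0, 0.0, 0.0], window=3))
# [0.0, 1.0, 1.0, 1.0, 0.0]
```

A wider window removes more jitter but also blunts genuinely fast motion, such as the wrist near release.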

Nice! I agree that this looks better. It's doing an impressive job of inferring how each arm moves as it goes behind the body. The only places I see it struggling are (1) tracking the throwing wrist when it's roughly straight back behind me, away from the camera, where the depth gets confused for a moment, and (2) when the front shin goes vertical, where it seems to have trouble tracking the lead knee and hip joint in the depth dimension away from the camera. I think whether it's worth spending time fixing things like that depends on the goal.

Potential goals.

1)

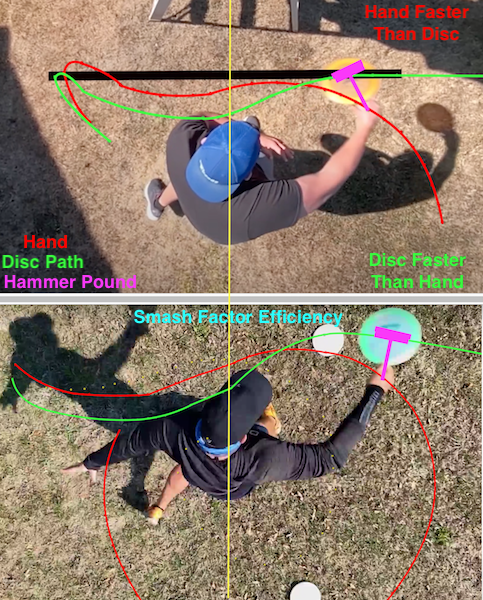

Academic. Getting a skeletonized 3D motion model can provide an abstraction of the core throwing motions and a basis for comparing motion patterns and their outcomes (disc release speed, "smash factor", or whatever one is interested in). This approach is going to miss important details of the biology and the center of gravity (CoG), but it's still useful for the big picture. Maybe a CoG could be inferred with some precision, but that's tricky because we all carry mass differently on our skeletons (or on the abstract wireframes).
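To make the CoG idea concrete: a common rough approach is a mass-weighted average of body-segment centers. The sketch below assumes each segment's center is the midpoint of its two joints, and the mass fractions are hypothetical placeholders, which is exactly where "we all carry mass differently" bites.

```python
def center_of_gravity(segments, mass_fractions):
    """Estimate whole-body CoG for one frame as a mass-weighted average.

    segments: dict of segment name -> (proximal, distal) pair of (x, y, z)
        joint positions taken from the wireframe.
    mass_fractions: dict of segment name -> share of body mass. These are
        hypothetical placeholders; real values differ from person to person.
    Simplification: each segment's own center of mass is taken to be the
    midpoint of its two joints.
    """
    total = sum(mass_fractions[name] for name in segments)
    cog = [0.0, 0.0, 0.0]
    for name, (proximal, distal) in segments.items():
        weight = mass_fractions[name] / total
        for axis in range(3):
            cog[axis] += weight * (proximal[axis] + distal[axis]) / 2
    return tuple(cog)


# Hypothetical two-segment example: a heavy "trunk" and a lighter "leg".
segments = {
    "trunk": ((0.0, 0.0, 0.0), (0.0, 0.0, 2.0)),
    "leg": ((1.0, 0.0, 0.0), (1.0, 0.0, -2.0)),
}
print(center_of_gravity(segments, {"trunk": 0.75, "leg": 0.25}))
# (0.25, 0.0, 0.5)
```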

Empirically, these could be powerful tools to show players (1) what people have in common in top form (I think most of it is written up on DGCR, but these wireframes make some of it much easier to see) and (2) how changes, or potential changes, could affect speed and consistency.

2)

Coaching. To me, these 2D and 3D wireframes illustrate all kinds of things I could sort of see but that were really hard to pick out until recently. I think beginners lack the conceptual eye, and these wireframes can help. They make a few things really obvious, including the way the whole body rocks, swings, and shifts (look at the spine and pelvis in the wireframes), the fundamental lateral shift and how the pelvis moves within that shift, the way the plant and the movement through braced legs cause the tilted spiral and rotation into the follow-through, and so on. With players absolutely drowning in information (much of it bad or redundant), these could help a coach "cut to the chase" about what the primary motion pattern looks like and get people focused on the big picture when they're swimming in details. The fundamental motion is remarkably similar across people. Pointing out how a change in a player's wireframe would make it more like a top pro's overall wireframe motion could be very powerful.
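One hedged way to put a number on "more like a top pro's wireframe" is to compute a joint angle per frame (say, the lead knee from hip, knee, and ankle positions), resample both throws to the same number of points so differences in duration don't dominate, and take the root-mean-square (RMS) difference. The joint choice, resampling length, and metric are all assumptions for illustration.

```python
import math


def joint_angle(a, b, c):
    """Angle at joint b, in degrees, formed by distinct 3D points a-b-c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(ba[i] * bc[i] for i in range(3))
    norm = math.hypot(*ba) * math.hypot(*bc)
    # Clamp to guard against tiny floating-point overshoot outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


def resample(series, n):
    """Linearly resample a time series to n evenly spaced points (n >= 2)."""
    if len(series) == 1:
        return [float(series[0])] * n
    out = []
    for k in range(n):
        t = k * (len(series) - 1) / (n - 1)
        i = min(int(t), len(series) - 2)
        frac = t - i
        out.append(series[i] * (1 - frac) + series[i + 1] * frac)
    return out


def rms_difference(player, pro, n=101):
    """RMS gap between two angle curves after time-normalizing both."""
    p, q = resample(player, n), resample(pro, n)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(p, q)) / n)
```

Plain time normalization assumes both throws start and end at comparable events (e.g. plant to release); aligning on such events first would likely matter more than the choice of metric.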

3)

Customization. Should you use a Gibson off arm or a GG off arm? Should you hop vertically or horizontally? Can you get a little more late acceleration with a Simon or a GG move into the pocket? I don't think wireframes like this will answer all of those questions (in the end, "just do it and see what happens" prevails), but the idea that you can start to see and think about what might work for your body is cool. And most good models let you specify alternate starting positions and dynamics to run theoretical experiments: maybe Player A finds the GG off arm really hard because of their learning history, but the model suggests they should stick with it if crushing is their goal. A model helps you think about changes in the context of that player's overall swing. Monday morning motivation/speculation.

Basically, the interpolation works like this: AlphaPose produces confidence values for the position of each joint in each frame. I removed all position data where the confidence value was lower than a set threshold and then did a simple linear interpolation between the remaining points. (There's definitely a smarter approach here than linear interpolation, but that's a problem for future me to deal with.) I previously had this working in the 2D version only, but now the 3D version has it too. Also, I added the ability to manually remove and then interpolate over specific problematic frames.
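The procedure described above (drop low-confidence points, optionally drop hand-picked frames, then fill linearly between what's left) can be sketched for a single joint coordinate like this. The threshold value and the function name are hypothetical; frames before the first or after the last kept point are held at the nearest kept value rather than extrapolated.

```python
import bisect


def fill_low_confidence(values, confidences, threshold=0.5, drop_frames=()):
    """Blank out unreliable frames for one joint coordinate, then fill them
    by linear interpolation between the nearest kept frames.

    threshold: hypothetical confidence cutoff (the actual value isn't given).
    drop_frames: frame indices to remove by hand, e.g. known bad frames.
    """
    drop = set(drop_frames)
    kept = [i for i, c in enumerate(confidences)
            if c >= threshold and i not in drop]
    if not kept:
        raise ValueError("no frames pass the confidence threshold")
    kept_set = set(kept)
    filled = []
    for i in range(len(values)):
        if i in kept_set:
            filled.append(float(values[i]))
            continue
        pos = bisect.bisect_left(kept, i)  # kept[pos - 1] < i < kept[pos]
        if pos == 0:  # before the first kept frame: hold its value
            filled.append(float(values[kept[0]]))
        elif pos == len(kept):  # after the last kept frame: hold its value
            filled.append(float(values[kept[-1]]))
        else:
            left, right = kept[pos - 1], kept[pos]
            frac = (i - left) / (right - left)
            filled.append(values[left] + frac * (values[right] - values[left]))
    return filled


# Frames 1, 4, and 5 have low confidence, so their 99s are replaced.
print(fill_low_confidence([0, 99, 2, 3, 99, 99, 6],
                          [0.9, 0.1, 0.9, 0.9, 0.2, 0.2, 0.9]))
```

If NumPy is available, `numpy.interp` performs the same linear fill (and also holds the end values constant by default).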

This really does seem to work well overall without fancier interpolation. If a different method rescues a couple of the remaining minor issues, it might be worth it, provided it isn't too expensive, depending on the goal for this little project.
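If a smoother fill ever seems worth the effort, one standard alternative to linear interpolation is a natural cubic spline through the kept frames. This is a swapped-in technique for illustration, not something implemented in the thread; it's the textbook tridiagonal construction, written in plain Python so it has no dependencies.

```python
import bisect


def natural_cubic_spline(xs, ys):
    """Return a function evaluating the natural cubic spline through (xs, ys).

    xs: strictly increasing x values (e.g. the kept frame indices), at least two.
    "Natural" means the second derivative is zero at both end points.
    """
    n = len(xs) - 1
    if n < 1:
        raise ValueError("need at least two points")
    h = [xs[i + 1] - xs[i] for i in range(n)]
    alpha = [0.0] * (n + 1)
    for i in range(1, n):
        alpha[i] = 3 * (ys[i + 1] - ys[i]) / h[i] - 3 * (ys[i] - ys[i - 1]) / h[i - 1]
    # Forward sweep of the tridiagonal system for the quadratic coefficients c.
    diag, mu, z = [1.0] * (n + 1), [0.0] * (n + 1), [0.0] * (n + 1)
    for i in range(1, n):
        diag[i] = 2 * (xs[i + 1] - xs[i - 1]) - h[i - 1] * mu[i - 1]
        mu[i] = h[i] / diag[i]
        z[i] = (alpha[i] - h[i - 1] * z[i - 1]) / diag[i]
    # Back substitution; c[n] = 0 (and c[0] = 0) are the natural end conditions.
    b, c, d = [0.0] * n, [0.0] * (n + 1), [0.0] * n
    for j in range(n - 1, -1, -1):
        c[j] = z[j] - mu[j] * c[j + 1]
        b[j] = (ys[j + 1] - ys[j]) / h[j] - h[j] * (c[j + 1] + 2 * c[j]) / 3
        d[j] = (c[j + 1] - c[j]) / (3 * h[j])

    def evaluate(x):
        # Interval containing x; outside the knots, the end cubic is extended.
        j = min(max(bisect.bisect_right(xs, x) - 1, 0), n - 1)
        dx = x - xs[j]
        return ys[j] + b[j] * dx + c[j] * dx ** 2 + d[j] * dx ** 3

    return evaluate
```

To fill gaps, build the spline from the kept frames only, e.g. `spline = natural_cubic_spline(kept, [values[i] for i in kept])` (hypothetical names), then evaluate `spline(i)` at each dropped frame. Unlike the linear fill, a cubic spline can overshoot between widely spaced frames, so it isn't automatically better.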

In addition to the frame rate, I think contrast is key for the model to work well: having a reasonable amount of contrast between the thrower and the background is essential.

Assuming good contrast, my guess for the minimum requirements for a decent result is 480p/60fps, but you'd probably want something like 720p/120fps to be safe.
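A quick way to see why frame rate matters is to compute how far a fast-moving joint travels between consecutive frames. The 25 m/s hand speed below is a hypothetical round number for illustration, not a measurement from these videos.

```python
def travel_per_frame_cm(speed_m_per_s, fps):
    """Distance (cm) a point moving at speed_m_per_s covers between frames."""
    return 100.0 * speed_m_per_s / fps


HAND_SPEED = 25.0  # hypothetical hand speed near release, in m/s
for fps in (60, 120, 240):
    print(f"{fps:>3} fps: {travel_per_frame_cm(HAND_SPEED, fps):.1f} cm per frame")
# 60 fps: 41.7 cm per frame
# 120 fps: 20.8 cm per frame
# 240 fps: 10.4 cm per frame
```

Blur inside a single frame depends on the exposure (shutter) time rather than the frame rate itself, though a high frame rate caps the exposure at 1/fps.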

Makes sense. Just by eye, the 120fps video with good background contrast still certainly minimized the blurring, though I'd be curious whether it struggles with a big arm. Even in my 240fps video there's a point where it starts to blur a bit moving through the hit/release. But pragmatically, as long as it's good enough not to botch the wrist tracking, it's probably OK. For my money, the fidelity of the wrist tracking and inferring release speed (if possible, though a radar gun is probably best in any case) are what matter most. Tracking the nose coming around is a compromise between the two for academic purposes. For coaching purposes, just knowing how fast the dang thing came out may be what matters to most people.
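On inferring speed from the wrist tracking: given per-frame 3D wrist positions and the frame rate, a finite-difference estimate of wrist speed is straightforward. This sketch assumes the coordinates are already in meters (3D pose output may need scaling first), and peak wrist speed is only a proxy; it won't equal the disc's release speed.

```python
import math


def joint_speeds(positions, fps):
    """Per-frame speed (units per second) of one joint from its 3D positions.

    positions: list of (x, y, z) tuples, one per frame, assumed to be in meters.
    Uses central differences inside the series and one-sided differences
    at the two ends.
    """
    n = len(positions)
    if n < 2:
        raise ValueError("need at least two frames")
    speeds = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        distance = math.dist(positions[hi], positions[lo])
        speeds.append(distance * fps / (hi - lo))
    return speeds
```

Usage would look like `peak = max(joint_speeds(wrist_xyz, fps=240))`, where `wrist_xyz` is a hypothetical list of wrist positions. Differencing amplifies jitter, so the interpolated and smoothed positions are the ones to feed in.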

Anyway, how are we feeling about this? I'm happy to provide the raw video files and data, tinker with how things are displayed, or do whatever else people find helpful. The code is unfortunately a complete mess, but whenever I get a good chunk of time, I can try to clean it up so that others can use it.

I mean, I'm just giddy; it's super cool and illustrates in real time many things we've read about here. I think most players want more speed and consistency when they get serious about form work, so the ability to tie the wireframes to those outcomes is important. I'm assuming the main idea is being able to identify changes that produce better lagged acceleration, which you can see in the time courses. That's because if the fundamental motion pattern is good and creates a nice lagged acceleration spike, with a sudden late peak moving through the wrist, then the battle is about improving that spike and learning to bring more momentum and shift into the swing. I'm kind of bummed that it seems hard to capture the late rotational aspect, like GG's or Simon's forearm supinating into the hit, which is important to ejection speed, but it's hard to get it all!
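The "lagged acceleration" idea could be checked roughly by finding the frame where each joint's speed peaks and seeing whether the peaks run from the core outward (hip, then shoulder, elbow, wrist). The joint names and the assumption that a good throw is strictly proximal-to-distal are illustrative; the speed curves could come from any per-frame speed calculation.

```python
def peak_frames(speed_curves):
    """Map each joint name to the frame index of its maximum speed."""
    return {name: max(range(len(s)), key=s.__getitem__)
            for name, s in speed_curves.items()}


def peaks_in_sequence(speed_curves, order):
    """True if peak-speed frames never go backward along `order`.

    order: joint names from core to hand, e.g. ["hip", "shoulder", "elbow",
    "wrist"] (hypothetical keys; use whatever the pose model exports).
    """
    peaks = peak_frames(speed_curves)
    frames = [peaks[name] for name in order]
    return all(a <= b for a, b in zip(frames, frames[1:]))


curves = {  # made-up speed curves, one value per frame
    "hip": [1, 3, 2, 1, 0],
    "shoulder": [0, 2, 4, 1, 0],
    "wrist": [0, 0, 1, 5, 2],
}
print(peak_frames(curves))  # {'hip': 1, 'shoulder': 2, 'wrist': 3}
print(peaks_in_sequence(curves, ["hip", "shoulder", "wrist"]))  # True
```

Dividing the frame gaps between peaks by the frame rate would turn the sequence into lag times in seconds.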

I'm also not sure we've solved the problem of the "best" swing for each player, and that's where a major innovation could be.

For individual coaching, making it a usable package is obviously appealing IMHO. Just having a few pro examples to compare against is already outstanding.